Large Multimodal Models (LMMs) vs Large Language Models (LLMs)

Large multimodal models (LMMs) are a big change because they can handle different types of data like text, images, and audio. But they are complex and need a lot of data, which can be tricky at times. From the start, it was evident that AI would need to be multifunctional and serve as a single platform for various purposes, and LMM exactly is that.

Large Multimodal Models (LMMs) vs Large Language Models (LLMs)

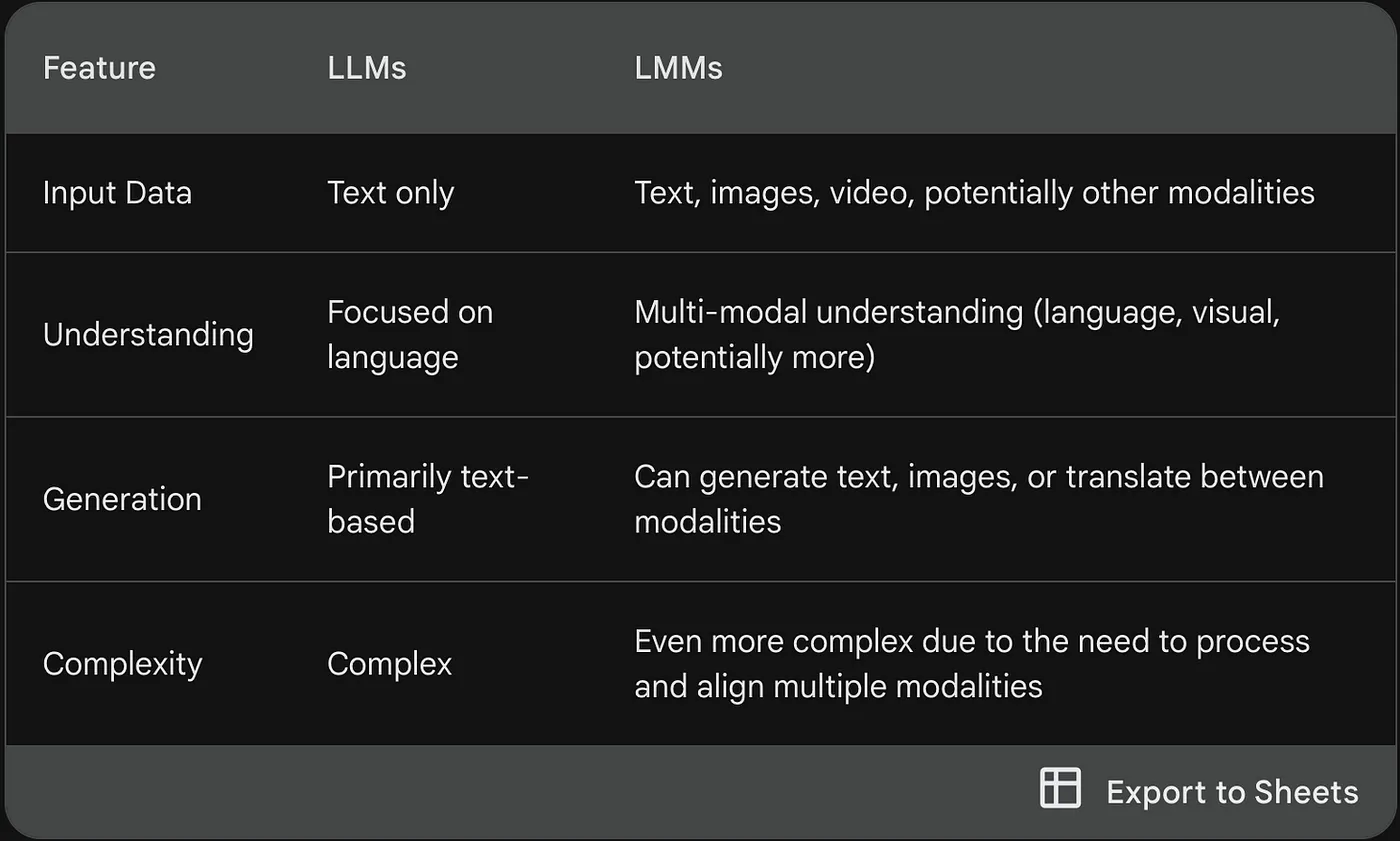

The real difference is in how each model processes data, their specific requirements, and the formats they support.

Large multimodal models (LMMs) are a big change because they can handle different types of data like text, images, and audio. But they are complex and need a lot of data, which can be tricky at times. From the start, it was evident that AI would need to be multifunctional and serve as a single platform for various purposes, and LMM exactly is that.

What is exactly a Large Multimodal Model (LMM)?

A Large Multimodal Model (LMM) is a sophisticated type of artificial intelligence designed to process and make sense of various forms of data at the same time. Unlike traditional AI models, which may be specialized in handling just one kind of input, such as only text — LMMs are capable of integrating multiple types of data, including text, images, audio, and video.

Consider an AI system that can perform several tasks beyond reading and writing text. For example, if you provide this AI with a photograph of a product and also include an audio recording explaining a problem with it, the LMM can analyze both the visual and spoken information together. This combined understanding allows the AI to offer a more accurate and insightful response, considering all available data.

This capacity to handle diverse data types simultaneously makes LMMs especially powerful for complex tasks. In a medical context, for instance, an LMM could review a combination of X-ray images, patient notes, and spoken explanations from healthcare professionals. By integrating these different inputs, the model can assist in providing a more precise diagnosis than if it were only analyzing one type of data.

Moreover, LMMs can produce outputs in various formats, not limited to just text. This means they can generate visual displays, audio feedback, or even video content, depending on the needs of the situation. This versatility makes LMMs highly adaptable and useful across a range of fields, from customer service to multimedia content creation.

While many people might imagine such advanced technologies only from movies or science fiction, LMMs are very real. They show that what once seemed like futuristic ideas are now part of our everyday technology.

How do large multimodal models work?

To understand how they function, it’s helpful to look at their similarities with large language models (LLMs), their diverse training processes, and the methods used to refine their performance.

Training and Design Similarities

LMMs share many similarities with LLMs in terms of design and operation. Both types of models use a transformer architecture, which is a powerful framework that allows them to process and understand complex patterns in data. This architecture helps the models manage large volumes of information and learn intricate relationships between different data points.

Like LLMs, LMMs undergo extensive training using massive datasets and sophisticated reinforcement learning techniques. The main difference is that while LLMs primarily deal with text, LMMs are trained with a variety of data types, including text, images, audio, and video. This broad approach enables LMMs to integrate and make sense of different kinds of information simultaneously.

Training with Diverse Data

The core training process for an LMM involves exposing the model to a vast array of data types. For example, when teaching an LMM about a concept like a “dog,” it is not only given textual descriptions but also shown thousands of images of dogs, provided with audio clips of barking, and given videos showing dogs in action. This multifaceted exposure helps the model understand the concept from multiple angles.

By processing images, the LMM learns to identify visual features such as fur, ears, and tails. Through audio, it learns to associate the sound of barking with the concept of a dog. The goal is for the model to build a comprehensive understanding that integrates these different types of data, allowing it to recognize and respond to the concept of a dog in various contexts.

Fine-Tuning and Refinement

After the initial training, the model undergoes fine-tuning to ensure it performs effectively in practical applications. This phase includes several techniques to improve the model’s performance and safety. Reinforcement learning with human feedback (RLHF) is one method where the model’s outputs are reviewed and adjusted based on human evaluations. Supervisory AI models and red teaming — where experts attempt to find weaknesses in the model — are also used to enhance its reliability.

These refinement processes are crucial for addressing any biases or errors that may have emerged during training. For instance, if an LMM produces biased or inappropriate responses, fine-tuning helps correct these issues and align the model with ethical standards and practical requirements.

Practical Functionality

Once the training and fine-tuning are complete, the result is an LMM capable of processing and understanding a wide range of data types. This enables the model to handle complex tasks that involve integrating multiple inputs, such as analyzing multimedia content or providing comprehensive insights across different data forms. The LMM’s ability to understand text, images, audio, and video makes it a versatile tool for various applications, from content analysis to interactive AI systems.

What are some famous large multimodal models?

1. GPT4o: OpenAI recently released GPT-4o, a multimodal model that offers GPT-4 level performance (or better) at much faster speeds and lower costs. It’s available to ChatGPT Plus and Enterprise users, and its multimodality means you can quickly create and analyze images, interpret data, and have flowing voice conversations with the AI among other tasks. 2. CLIP (Contrastive Language–Image Pretraining) by OpenAI: CLIP can understand images based on natural language. It’s able to categorize images into different groups even if it hasn’t been specifically trained for those categories, by using text descriptions to guide its understanding. 3. Flamingo by DeepMind: Flamingo combines the abilities of understanding both text and images. It can handle tasks that need both types of information, integrating what it reads with what it sees to perform effectively.

![GPUNET Verifiable Exchange: The Next Frontier for $GPU, Nodes and Ecosystem [TEASER]](https://i.ibb.co/Z1JWjN7r/Article-Cover.png)